Designing the Prototype

1) Development of the app began with collecting multispectral imagery from the Sentinel-2 satellite mission through Google Earth Engine. Imagery from the 6 months before and the 6 months after each cyclone allowed a temporal analysis of the impacts of both Cyclone Amphan and Cyclone Mocha.

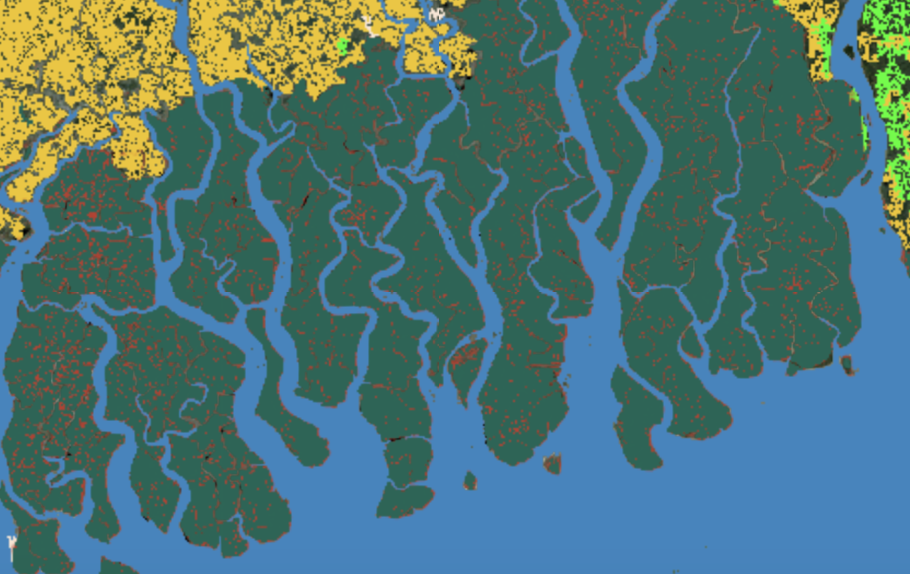
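
Exact date ranges are not stated here. As a minimal sketch, assuming 6 calendar months on either side of each landfall date, the pre- and post-cyclone windows could be computed as below. The landfall dates (20 May 2020 for Amphan, 14 May 2023 for Mocha) are supplied as assumptions, and `shift_months` and `study_windows` are hypothetical helpers. End dates are treated as exclusive, the same convention Earth Engine's `filterDate(start, end)` uses.

```python
import calendar
from datetime import date


def shift_months(d, months):
    """Move a date by whole calendar months, clamping the day to the
    target month's length (e.g. 31 Aug minus 6 months gives end of Feb)."""
    index = d.year * 12 + (d.month - 1) + months
    year, month0 = divmod(index, 12)
    last_day = calendar.monthrange(year, month0 + 1)[1]
    return date(year, month0 + 1, min(d.day, last_day))


# Assumed landfall dates; they are not listed in this section.
LANDFALL = {
    "Amphan": date(2020, 5, 20),
    "Mocha": date(2023, 5, 14),
}


def study_windows(landfall, months=6):
    """Return ((pre_start, pre_end), (post_start, post_end)); ends are exclusive."""
    pre = (shift_months(landfall, -months), landfall)
    post = (landfall, shift_months(landfall, months))
    return pre, post


for name, day in LANDFALL.items():
    (pre_start, pre_end), (post_start, post_end) = study_windows(day)
    print(f"{name}: pre {pre_start} to {pre_end}, post {post_start} to {post_end}")
```

Calendar-month arithmetic is used instead of a fixed 182-day offset so that both windows line up with the same day of the month as landfall.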

2) The images were atmospherically corrected to reduce haze and other atmospheric effects, and a cloud-masking algorithm removed cloud-covered pixels. A Random Forest classifier, a supervised machine learning classification algorithm, was then used to map mangroves before and after each cyclone. Labeled training samples allowed the algorithm to associate the spectral signatures of image pixels with different land-cover classes. Seventy percent of the labeled samples, with various vegetation indices as input features, were used for training, and the remaining 30% were held out for testing.
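
The masking and feature steps can be sketched per pixel. This sketch assumes Sentinel-2 Level-2A surface-reflectance values (already atmospherically corrected) and masks pixels with the Scene Classification Layer (`SCL`); the cloud-masking algorithm actually used is not named, so that choice is an assumption. NDVI, NDWI and NDMI are illustrative stand-ins for the unnamed vegetation indices, and the dict-based pixel records and helper names are hypothetical.

```python
# SCL classes treated as unusable (an assumed choice):
# 0 no data, 1 saturated/defective, 3 cloud shadow,
# 8 cloud medium probability, 9 cloud high probability, 10 thin cirrus.
MASKED_SCL = {0, 1, 3, 8, 9, 10}


def is_clear(scl):
    """True if the pixel's SCL class is not cloud, shadow, or invalid."""
    return scl not in MASKED_SCL


def normalized_difference(a, b):
    """(a - b) / (a + b), returning 0.0 when the denominator is zero."""
    total = a + b
    return 0.0 if total == 0 else (a - b) / total


def spectral_features(px):
    """Illustrative index set: B3 green, B4 red, B8 NIR, B11 SWIR-1."""
    return {
        "NDVI": normalized_difference(px["B8"], px["B4"]),
        "NDWI": normalized_difference(px["B3"], px["B8"]),
        "NDMI": normalized_difference(px["B8"], px["B11"]),
    }


def feature_table(pixels):
    """Drop masked pixels, then attach index features to each labeled sample."""
    return [
        {**spectral_features(px), "label": px["label"]}
        for px in pixels
        if is_clear(px["SCL"])
    ]
```

Each row of the resulting table is what a supervised classifier consumes: a feature vector plus its reference label.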
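
A minimal sketch of the 70/30 holdout and of how accuracy might be checked on the held-out 30% follows. It assumes a simple random (not stratified) split with a fixed seed and reports overall accuracy and Cohen's kappa, two common measures for land-cover maps; which metrics were actually used is not stated, and the helper names are hypothetical. The predicted labels would come from the trained Random Forest (in Earth Engine, typically `ee.Classifier.smileRandomForest`), which is not reimplemented here.

```python
import random
from collections import Counter


def holdout_split(samples, train_frac=0.7, seed=0):
    """Shuffle a copy of the samples and split it into (train, test)."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_frac)
    return shuffled[:cut], shuffled[cut:]


def confusion_counts(actual, predicted):
    """Counter keyed by (reference_label, predicted_label)."""
    return Counter(zip(actual, predicted))


def overall_accuracy(cm):
    """Share of test samples whose predicted label matches the reference."""
    total = sum(cm.values())
    return sum(n for (a, p), n in cm.items() if a == p) / total


def kappa(cm):
    """Cohen's kappa: agreement beyond what chance alone would produce."""
    total = sum(cm.values())
    reference, predicted = Counter(), Counter()
    for (a, p), n in cm.items():
        reference[a] += n
        predicted[p] += n
    labels = set(reference) | set(predicted)
    p_e = sum(reference[lab] * predicted[lab] for lab in labels) / total ** 2
    if p_e == 1:
        return float("nan")  # undefined when chance agreement is perfect
    return (overall_accuracy(cm) - p_e) / (1 - p_e)
```

Kappa is reported alongside overall accuracy because a map dominated by one class can score high accuracy by chance alone.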

3) Once the classification accuracy was satisfactory, the classified maps were clipped to the Global Mangrove Watch 3.0 (2020) mangrove extent layer to calculate changes in mangrove extent. Additional layers, including the Forest Proximate People (FPP) population density data, were clipped to the area of mangrove loss in the Sundarbans. Communities within a 1-kilometer radius of lost mangroves were identified as the most susceptible to cyclone impacts.
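
The extent-change step can be sketched on small boolean grids. This assumes the before and after classifications and the Global Mangrove Watch layer have already been aligned on the same grid at an assumed 10 m scale (Sentinel-2's finest band resolution); `extent_change` and `pixels_to_hectares` are hypothetical helpers. Only pixels inside the GMW extent are counted, which is the effect of the clip.

```python
PIXEL_SIZE_M = 10  # assumed analysis scale (Sentinel-2 10 m bands)


def extent_change(before, after, gmw):
    """Count loss, gain, and stable mangrove pixels inside the GMW extent.

    All three inputs are equally shaped grids (lists of lists) of booleans:
    mangrove before the cyclone, mangrove after it, and GMW 2020 extent.
    """
    counts = {"loss": 0, "gain": 0, "stable": 0}
    for row_b, row_a, row_g in zip(before, after, gmw):
        for was, now, inside in zip(row_b, row_a, row_g):
            if not inside:
                continue  # outside the GMW layer: dropped, as in the clip
            if was and not now:
                counts["loss"] += 1
            elif now and not was:
                counts["gain"] += 1
            elif was and now:
                counts["stable"] += 1
    return counts


def pixels_to_hectares(n_pixels, pixel_size_m=PIXEL_SIZE_M):
    """Convert a pixel count to hectares (1 ha = 10,000 m^2)."""
    return n_pixels * pixel_size_m ** 2 / 10_000
```

At 10 m, each pixel covers 100 m^2, so 100 lost pixels correspond to 1 ha of mangrove loss.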
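
The 1-kilometer proximity rule can be sketched as a brute-force distance check. This assumes that loss pixels and FPP population cells are given as (x, y) centres in a projected, metre-based coordinate system, with one population value per cell; `population_near_loss` is a hypothetical helper. At Sundarbans scale, a spatial index or a raster distance or buffer operation would be the practical choice; the nested loop here only makes the rule explicit.

```python
import math


def population_near_loss(loss_xy, pop_cells, radius_m=1000.0):
    """Sum the population of cells whose centre lies within radius_m
    of at least one mangrove-loss pixel.

    loss_xy: iterable of (x, y) loss-pixel centres in metres.
    pop_cells: iterable of (x, y, population) tuples in the same CRS.
    """
    loss = list(loss_xy)  # materialize so it can be scanned once per cell
    exposed = 0.0
    for cx, cy, pop in pop_cells:
        if any(math.hypot(cx - lx, cy - ly) <= radius_m for lx, ly in loss):
            exposed += pop
    return exposed
```

Cells exactly 1 km away are counted as exposed because the comparison is inclusive (`<=`).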

These results were integrated into a Google Earth Engine App, producing the Vision of Grove app. Click below to watch a demo of the app.